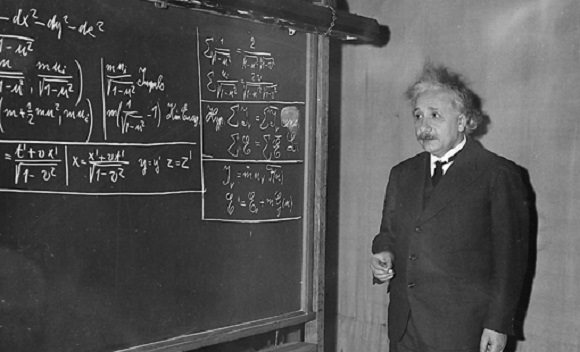

Physicists recognize four fundamental forces that govern the Universe: electromagnetism, the weak nuclear force, the strong nuclear force, and gravity. Whereas the first three are described by Quantum Mechanics through the Standard Model of Particle Physics, gravity is described by Einstein’s Theory of General Relativity. Surprisingly, gravity is the one that presents the biggest challenges to physicists. While General Relativity accurately describes how gravity works for planets, stars, galaxies, and galaxy clusters, it does not apply perfectly at all scales.

While General Relativity has been validated repeatedly over the past century (starting with the Eddington Eclipse Experiment in 1919), gaps still appear when scientists try to apply it at the quantum scale and to the Universe as a whole. In a new study led by Simon Fraser University, an international team of researchers tested General Relativity on the largest of scales and concluded that it might need a tweak or two. Their method could help scientists resolve some of the biggest mysteries facing astrophysicists and cosmologists today.

The team included researchers from Simon Fraser, the Institute of Cosmology and Gravitation at the University of Portsmouth, the Center for Particle Cosmology at the University of Pennsylvania, the Osservatorio Astronomico di Roma, the UAM-CSIC Institute of Theoretical Physics, Leiden University’s Institute Lorentz, and the Chinese Academy of Sciences (CAS). Their results appeared in a paper titled “Imprints of cosmological tensions in reconstructed gravity,” recently published in Nature Astronomy.

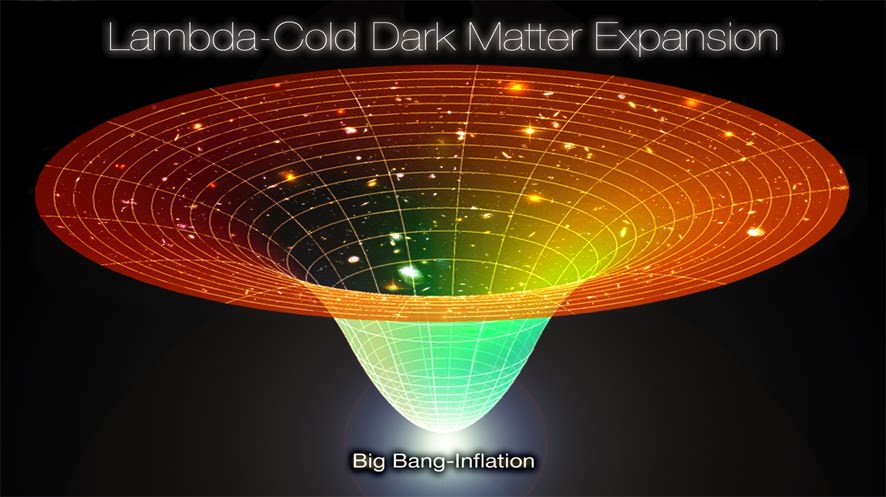

According to Einstein’s field equations for GR, the Universe was not static and had to be in a state of expansion (otherwise, the force of gravity would cause it to contract). While Einstein resisted this idea initially and tried to propose a mysterious force that held the Universe in equilibrium (his “Cosmological Constant”), the observations of Edwin Hubble in the 1920s showed that the Universe is expanding. Quantum theory also predicts that the vacuum of space is filled with energy that goes unnoticed because conventional methods can only measure changes in energy (rather than its total amount).

By the 1990s, new observatories like the Hubble Space Telescope (HST) pushed the boundaries of astronomy and cosmology. Thanks to surveys like the Hubble Deep Fields (HDF), astronomers could see objects as they appeared more than 13 billion years ago (less than a billion years after the Big Bang). To their surprise, they discovered that for the past 4 billion years, the rate of expansion has been accelerating. This led to what is known as “the old cosmological constant problem,” which implies that either gravity is weaker on cosmological scales or a mysterious force is driving cosmic expansion.

Lead author Levon Pogosian (Professor of Physics, Simon Fraser University) and co-author Kazuya Koyama (Professor of Cosmology, University of Portsmouth) summarized the issue in a recent article for The Conversation. As they explained it, the cosmological constant problem comes down to a single question with drastic implications:

“[W]hether the vacuum energy actually gravitates – exerting a gravitational force and changing the expansion of the universe. If yes, then why is its gravity so much weaker than predicted? If the vacuum does not gravitate at all, what is causing the cosmic acceleration? We don’t know what dark energy is, but we need to assume it exists in order to explain the universe’s expansion. Similarly, we also need to assume there is a type of invisible matter presence, dubbed dark matter, to explain how galaxies and clusters evolved to be the way we observe them today.”

The existence of Dark Energy is part of the standard cosmological theory known as the Lambda Cold Dark Matter (LCDM) model, where Lambda represents the Cosmological Constant/Dark Energy. According to this model, the mass-energy density of the Universe consists of 70% Dark Energy, 25% Dark Matter, and 5% normal (visible or “luminous”) matter. While this model has successfully matched observations gathered by cosmologists over the past 20 years, it assumes that most of the Universe is made up of components that have never been directly detected.

This is why some physicists have ventured that GR may need some modifications to explain the Universe as a whole. Moreover, a few years ago, astronomers noted that measuring the rate of cosmic expansion in different ways produced different values. This problem, explained Pogosian and Koyama, is known as the Hubble tension:

“The disagreement, or tension, is between two values of the Hubble Constant. One is the number predicted by the LCDM cosmological model, which has been developed to match the light left over from the Big Bang (the Cosmic Microwave Background radiation). The other is the expansion rate measured by observing exploding stars known as supernovas in distant galaxies.”
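To get a feel for what "tension" means in practice, the short sketch below expresses the gap between two measurements in units of their combined uncertainty. The numbers are illustrative round values in the range commonly quoted for CMB-based and supernova-based estimates of the Hubble Constant; they are assumptions made for this example and do not come from the article or the paper. The function name `tension_in_sigma` is likewise hypothetical, and the calculation assumes the two measurements are independent with Gaussian errors.

```python
import math


def tension_in_sigma(value_a, sigma_a, value_b, sigma_b):
    """Gap between two independent Gaussian measurements,
    in units of their combined 1-sigma uncertainty."""
    combined_sigma = math.sqrt(sigma_a ** 2 + sigma_b ** 2)
    return abs(value_a - value_b) / combined_sigma


# Illustrative inputs in km/s/Mpc (assumed for this sketch, not from the article).
h0_cmb, sigma_cmb = 67.4, 0.5   # value inferred from the CMB under LCDM
h0_sne, sigma_sne = 73.0, 1.0   # value measured with supernova distances

tension = tension_in_sigma(h0_cmb, sigma_cmb, h0_sne, sigma_sne)
print(f"Hubble tension: {tension:.1f} sigma")
```

With inputs like these, the gap comes out at several times the combined uncertainty, which is why it is hard to dismiss as a statistical fluke.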

Many theoretical ideas have been proposed for modifying the LCDM model to explain the Hubble Tension. Among them are alternative gravity theories, such as Modified Newtonian Dynamics (MOND), a modified take on Newton’s Law of Universal Gravitation that does away with the existence of Dark Matter. For over a century, astronomers have tested GR by observing how the curvature of spacetime is altered in the presence of gravitational fields. These tests have become particularly extreme in recent decades and include observing how Supermassive Black Holes (SMBHs) affect orbiting stars and how gravitational lenses amplify and alter the passage of light.

For their study, Pogosian and his colleagues used a statistical method known as Bayesian inference, which updates the probability of a hypothesis as more data is introduced. From there, the team simulated cosmic expansion based on three inputs: the CMB data from the ESA’s Planck satellite, supernova and galaxy catalogs like the Sloan Digital Sky Survey (SDSS) and Dark Energy Survey (DES), and the predictions of the LCDM model.
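As a rough illustration of how Bayesian inference works, the sketch below updates a belief about a single quantity each time a new measurement arrives. It is a toy example, not the team's analysis: it assumes a Gaussian prior and Gaussian measurement errors, and the function name `update_gaussian` and all numbers are hypothetical values chosen for illustration.

```python
import math


def update_gaussian(prior_mean, prior_sigma, measurement, measurement_sigma):
    """One Bayesian update for a Gaussian prior and a Gaussian likelihood.

    Returns the posterior mean and standard deviation.
    """
    prior_precision = 1.0 / prior_sigma ** 2
    data_precision = 1.0 / measurement_sigma ** 2
    post_precision = prior_precision + data_precision
    # The posterior mean is a precision-weighted average of prior and data.
    post_mean = (prior_mean * prior_precision
                 + measurement * data_precision) / post_precision
    return post_mean, math.sqrt(1.0 / post_precision)


# Start from a deliberately broad prior (hypothetical numbers).
mean, sigma = 70.0, 10.0

# Hypothetical measurements as (value, 1-sigma error) pairs.
for value, error in [(68.0, 2.0), (72.0, 2.0), (70.5, 1.0)]:
    mean, sigma = update_gaussian(mean, sigma, value, error)
    print(f"after measuring {value} +/- {error}: {mean:.2f} +/- {sigma:.2f}")
```

Each new measurement pulls the estimate toward the data and shrinks its uncertainty, which is the sense in which the probability of a hypothesis is refined as more data is introduced.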

“Together with a team of cosmologists, we put the basic laws of general relativity to test,” said Pogosian and Koyama. “We also explored whether modifying Einstein’s theory could help resolve some of the open problems of cosmology, such as the Hubble tension. To determine if GR is correct on the largest of scales, we set out, for the first time, to simultaneously investigate three aspects of it. These were the expansion of the Universe, the effects of gravity on light, and the effects of gravity on matter.”

Their results showed a few inconsistencies with Einstein’s predictions, though they had a rather low statistical significance. They also found that the Hubble tension was difficult to resolve simply by modifying the theory of gravity, suggesting that an additional force may be required or that there are errors in the data. If the former is true, said Pogosian and Koyama, then it is possible this force was present during the early Universe (ca. 370,000 years after the Big Bang) when protons and electrons first combined to create hydrogen.

Several possibilities have been advanced in recent years, ranging from a special form of Dark Matter and an early type of Dark Energy to primordial magnetic fields. In any case, this latest study indicates that further research is needed and that it may lead to a revision of the most widely accepted cosmological model. Said Pogosian and Koyama:

“[O]ur study has demonstrated that it is possible to test the validity of general relativity over cosmological distances using observational data. While we haven’t yet solved the Hubble problem, we will have a lot more data from new probes in a few years. This means that we will be able to use these statistical methods to continue tweaking general relativity, exploring the limits of modifications, to pave the way to resolving some of the open challenges in cosmology.”

Further Reading: The Conversation, Nature Astronomy

The post Einstein's Predictions for Gravity Have Been Tested at the Largest Possible Scale appeared first on Universe Today.
