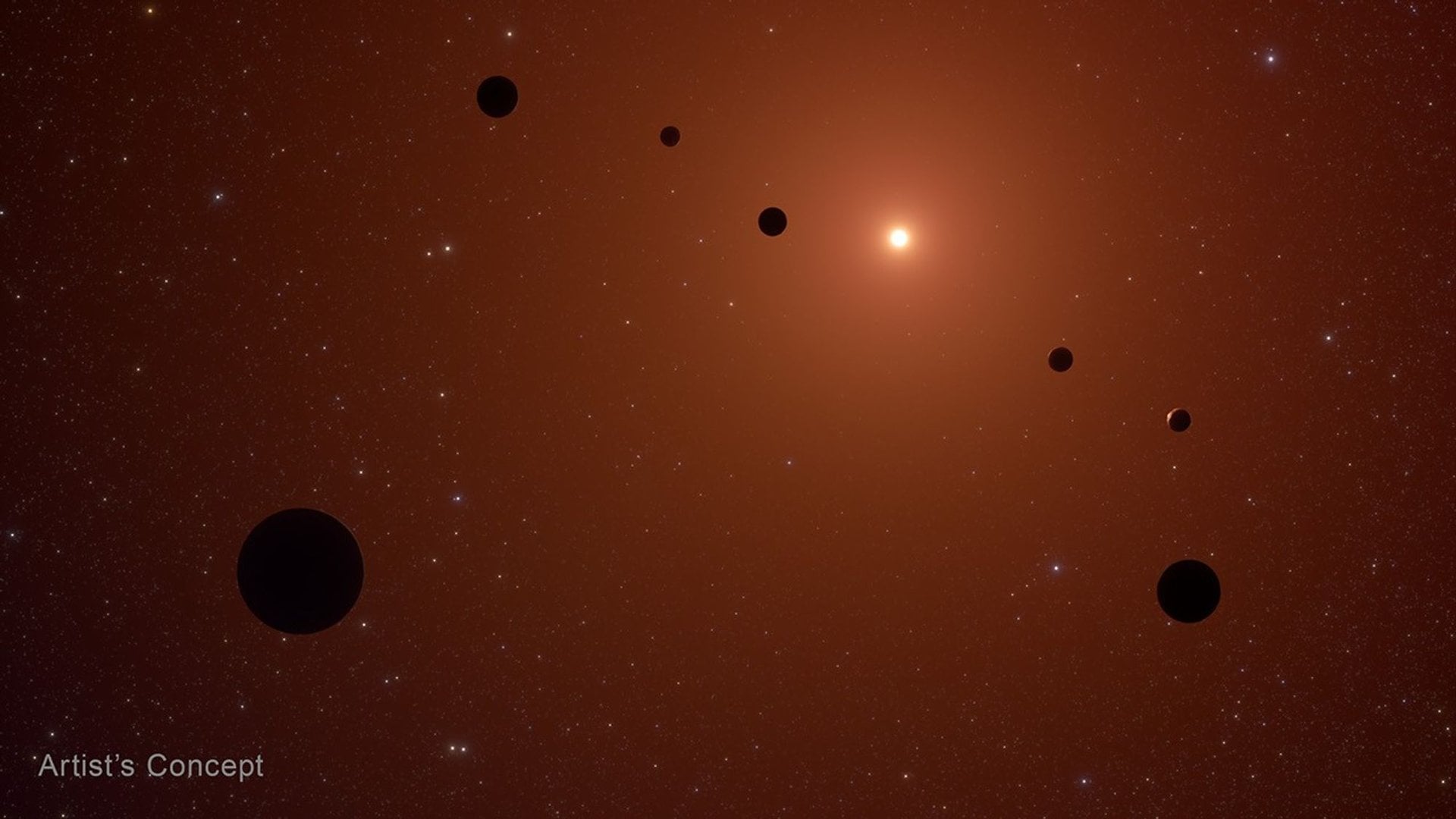

Anyone familiar with the search for alien life will have heard of the “Goldilocks Zone” around a star. It is defined as the orbital band where the temperature is just right for liquid water to pool on a rocky planet’s surface, a good approximation of what we thought of as the early conditions for life on Earth. But what happens if that life doesn’t stay on an Earth analog? If it starts, as we have, to move toward its neighboring planets, the idea of a habitable zone becomes much more complicated. A new paper from Dr. Caleb Scharf of the NASA Ames Research Center, one of the agency’s premier astrobiologists, tries to account for this possibility by introducing the framework of an Interplanetary Habitable Zone (IHZ).